In an earlier post, we discussed recent progress in large language models (LLMs): they are impressively fluent and creative, but they struggle with precise, correct reasoning. One might argue they are too creative: prone to coloring outside the lines.

This isn’t a problem for some applications, where a human user is naturally in the loop to keep things on the rails, and we see successful deployments of LLM-based systems in such cases. For example, many software engineers find LLMs helpful for generating code. They know that the generated code often has logical errors, but since they have the expertise to fix it, it can be nice to have an LLM assistant write a first draft. The same goes for writing an email: we are all “expert” enough that a first draft helps get the ball rolling, we can fix any errors, and we can be confident that the final draft says what we want.

But what if the end-user doesn’t have the expertise to understand what is right or wrong? This is the case in any situation where one human typically consults another for subject-matter expertise. And what if the “expert” doesn’t know enough to give valid explanations, and instead keeps saying the same thing reworded in different ways? This is what we get if we try to replace an expert human with an LLM. The LLM-as-expert is unreliable and there will be no one to catch when it goes wrong. That’s trouble.

This sort of expert-level automation is precisely what we do at Elemental Cognition (EC). Instead of pure LLMs, we use hybrid AI to automate problem-solving in situations where people usually consult experts for knowledgeable, personalized, interactive assistance, whether for configuring complex products or making intricate plans. The EC hybrid AI platform supports building applications that serve this expert role, using language models only as the interface to a much deeper logical understanding of the problem. In the rest of this post, we’ll talk about how this works.

This sort of application must perform three tasks. It needs to

- understand general knowledge about the area of expertise,

- understand each end-user’s specific scenario, and

- determine how to best solve the problem to help the user achieve their goal.

This must all be done through fluent communication, so that the application provides interactive assistance to the end-user.

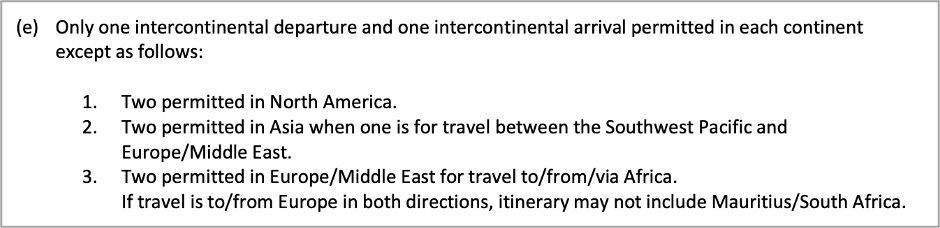

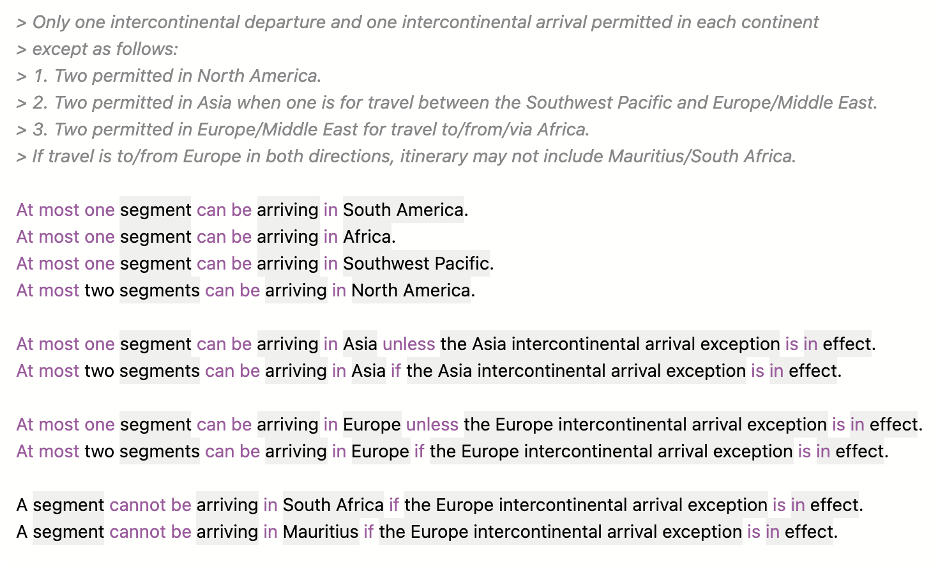

To make this concrete, let’s consider a specific problem that EC worked with a client to solve. This problem comes from the travel industry: the Oneworld airline alliance offers a Round-the-World ticket, where travelers can visit up to 16 destinations and travel for up to a year – all for an attractive price. To make these prices cost-effective for Oneworld’s member airlines, there are extensive rules that all itineraries must comply with. It’s a win/win, except that navigating the rules can be challenging for customers. Here’s just one of them:

If you don’t feel like understanding this rule in depth, you are not alone! Most round-the-world tickets were booked with the help of travel agents, who had to perform the same three tasks as the problem-solving application described above: (1) understand the rules and constraints that govern all valid trip itineraries, (2) understand an individual traveler’s goals (where they want to go, when, and how), and (3) determine the best solutions for the traveler that also meet the trip requirements.

This might be an iterative process: the customer’s goals may change as they see different itineraries and realize the repercussions of earlier choices, and the travel agent must adapt accordingly. This requires fluent communication as the travel agent interacts with the traveler to provide assistance.

Can this be automated?

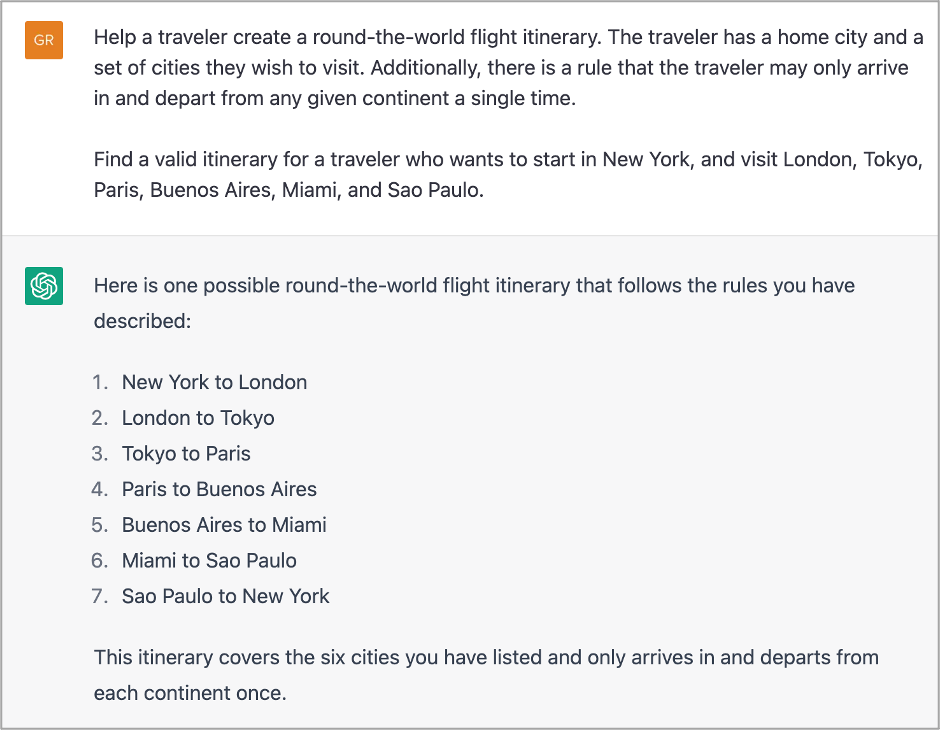

First, let’s see what happens if we try to solve the whole problem with ChatGPT. We can give it a simplified version of the rule above, give it a list of desired destinations, and ask it for a valid itinerary.

The response is fluent: ChatGPT comes up with easy-to-read natural language that, at a glance, looks like it fits the bill. But while ChatGPT says that the itinerary arrives in and departs from each continent only once, that’s simply not true! For both Europe and South America, it goes in, out, and back in again. You have to look carefully to spot the problem, and if you aren’t aware of the trip rule, you won’t realize it is broken at all.
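By contrast, a rule like this is easy to check mechanically. The sketch below encodes only the simplified rule as stated in this post, under the assumption that a round trip starts and ends on the origin continent, so that continent may appear only at the beginning and end while every other continent may be entered at most once. The function name, city codes, and continent table are illustrative assumptions, not EC's implementation, and the real Oneworld rules contain many more conditions.

```python
from collections import Counter
from itertools import groupby


def continents_entered_twice(itinerary, continent_of):
    """Return the continents that a round-trip itinerary enters more than once.

    itinerary: list of city codes that starts and ends at the traveler's origin.
    continent_of: mapping from each city code to its continent (illustrative data).
    """
    # Collapse consecutive stops on the same continent into a single "visit".
    visits = [continent for continent, _ in groupby(continent_of[c] for c in itinerary)]
    origin = visits[0]
    # A round trip legitimately starts and ends on the origin continent,
    # so only the visits in between are checked for repeats.
    inner = visits[1:-1]
    counts = Counter(inner)
    return sorted(c for c in counts if counts[c] > 1 or c == origin)


continent_of = {"DFW": "North America", "LHR": "Europe", "CDG": "Europe", "NRT": "Asia"}
print(continents_entered_twice(["DFW", "LHR", "NRT", "CDG", "DFW"], continent_of))  # ['Europe']
print(continents_entered_twice(["DFW", "LHR", "CDG", "NRT", "DFW"], continent_of))  # []
```

The first itinerary makes the same kind of mistake as the ChatGPT answer: it leaves Europe for Asia and then comes back.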

Quite possibly, there are ways to phrase the task so that ChatGPT will avoid this particular error. (Hunting for the right turn of phrase is called “prompt engineering.”) But the problem isn’t any one error; it’s that the system is fundamentally hard to trust, because there are no guardrails keeping it on track. We must be aware of this when we use LLMs.

How does EC solve the Round-the-World booking problem? Let’s discuss each of the three components: (1) understanding general knowledge about the use case, (2) understanding each end user’s specific scenario, and (3) combining these to determine how to help the user achieve their goal. The application does this with fluent understanding and use of natural language.

Since (3) is where it all comes together, it is easiest to discuss this first. For this, we use a technology decidedly different from language models. We call it our Interactive Reasoning Engine. It is designed to do precise logical reasoning, but in such a way that it can guide a conversation dynamically. Most importantly, given (1) knowledge about the use case and (2) the user’s scenario as inputs, it can be adapted to any domain, from adjudicating an insurance claim to making a college achievement plan.

In the Oneworld use case, it manifests as a virtual assistant that helps customers configure a valid itinerary. Here it is working on the same example we gave ChatGPT above:

You can see that the itinerary enters each continent only once. It also respects the dozens of other trip rules, minimizes total travel distance, and flies only on segments with high flight availability. It is presented to the user in fluent natural language, but unlike ChatGPT’s answer, it is backed by the logic of the Reasoning Engine, which by design will always reason correctly.
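To make "respects the rules and minimizes distance" concrete, here is a toy sketch that treats the rule as a hard constraint and distance as the objective. It is an assumption-laden illustration, not how the Reasoning Engine works: it brute-forces every ordering (which only scales to a handful of stops), uses made-up 2-D coordinates instead of real geography, and ignores flight availability and every rule except the simplified continent rule. All names are hypothetical.

```python
from itertools import groupby, permutations
from math import dist


def enters_each_continent_once(route, continent_of):
    """True if no continent is re-entered mid-trip (simplified rule, round trip)."""
    visits = [c for c, _ in groupby(continent_of[city] for city in route)]
    inner = visits[1:-1]  # the route starts and ends on the origin continent
    return len(inner) == len(set(inner)) and visits[0] not in inner


def route_length(route, coords):
    """Sum of straight-line leg lengths over toy 2-D coordinates."""
    return sum(dist(coords[a], coords[b]) for a, b in zip(route, route[1:]))


def shortest_valid_route(origin, stops, continent_of, coords):
    """Try every ordering of the stops and keep the shortest one that obeys the rule."""
    best = None
    for order in permutations(stops):
        route = [origin, *order, origin]
        if not enters_each_continent_once(route, continent_of):
            continue  # discard orderings that break the rule, however short they are
        length = route_length(route, coords)
        if best is None or length < best[0]:
            best = (length, route)
    return best  # None if no ordering satisfies the rule


continent_of = {"DFW": "North America", "LHR": "Europe", "CDG": "Europe", "NRT": "Asia"}
coords = {"DFW": (0, 0), "LHR": (4, 0), "CDG": (5, 0), "NRT": (9, 0)}  # made-up positions
length, route = shortest_valid_route("DFW", ["LHR", "NRT", "CDG"], continent_of, coords)
print(length, route)
```

Separating the constraint check from the objective means a shorter but invalid ordering can never win, which is the property the ChatGPT answer lacked.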

Furthermore, if the user asks for something that breaks one of the trip rules, the system will explain that to them and explain their options to remedy it. Here the user runs up against a different trip rule, and the system guides them back on track. Again, this is presented as easy-to-read text that makes the situation clear, but that is grounded in a precise understanding.
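Because the rule is explicit, a violation can be reported with a reason and paired with concrete alternatives. The sketch below is one hypothetical way to do that for the simplified continent rule: it names the re-entered continent, then proposes (a) regrouping the same stops by continent and (b) dropping any single stop whose removal fixes the problem. The helper names and both remedy strategies are illustrative choices, not EC's method.

```python
from itertools import groupby


def reentered(route, continent_of):
    """Continents entered more than once mid-trip (simplified rule, round trip)."""
    visits = [c for c, _ in groupby(continent_of[city] for city in route)]
    inner = visits[1:-1]
    return sorted({c for c in inner if inner.count(c) > 1 or c == visits[0]})


def explain_and_suggest(route, continent_of):
    """Return a plain-language explanation plus alternative routes that satisfy the rule."""
    problems = reentered(route, continent_of)
    if not problems:
        return "This itinerary satisfies the continent rule.", []
    message = (f"This itinerary enters {', '.join(problems)} more than once, "
               "but each continent may be entered only once.")
    origin, stops = route[0], route[1:-1]
    options = []
    # Option 1: keep every stop, but group stops by continent in order of first appearance.
    first_seen = list(dict.fromkeys(continent_of[s] for s in stops))
    regrouped = [origin, *sorted(stops, key=lambda s: first_seen.index(continent_of[s])), origin]
    if not reentered(regrouped, continent_of):
        options.append(regrouped)
    # Option 2: drop a single stop, if that alone resolves the problem.
    for i in range(len(stops)):
        candidate = [origin, *stops[:i], *stops[i + 1:], origin]
        if not reentered(candidate, continent_of):
            options.append(candidate)
    return message, options


continent_of = {"DFW": "North America", "LHR": "Europe", "CDG": "Europe", "NRT": "Asia"}
message, options = explain_and_suggest(["DFW", "LHR", "NRT", "CDG", "DFW"], continent_of)
print(message)
for option in options:
    print(" -> ".join(option))
```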

How does this differ from a chatbot? The user interface is similar, but that belies what’s happening under the hood. There are about 10³⁴ possible combinations of cities a customer may want to visit. Each combination may run up against different business rules for different reasons, and each situation may have a different path to resolution. In fact, these paths may change from day to day as flight availability changes. Typical chatbots rely on exhaustive conversational flowcharts to guide the user through a process, but no such flowchart could be written and maintained for a use case this complex. The power of the Reasoning Engine is that it doesn’t need to be preprogrammed with a conversational flowchart. Instead, it reasons dynamically to determine which questions or suggestions will help the end user reach their goal.
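A quick count shows why enumerating conversation paths is hopeless. Assuming a network of n cities and trips of up to k destinations, the number of distinct destination sets is the sum of the binomial coefficients C(n, j) for j = 1..k, before even considering the order of visits or dates. This simple model is an assumption for illustration; the post does not say exactly how its 10³⁴ figure was computed.

```python
from math import comb


def destination_sets(num_cities: int, max_destinations: int) -> int:
    """Number of distinct sets of 1..max_destinations cities chosen from num_cities."""
    return sum(comb(num_cities, k) for k in range(1, max_destinations + 1))


# Ordering multiplies the count further: a set of k cities has k! possible visit orders.
for n in (10, 50, 100):
    print(n, destination_sets(n, 16))
```

The count grows so quickly with network size that even modest networks produce more cases than any hand-written flowchart could cover.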

Because the Reasoning Engine is a fundamentally logical system, it will always correctly apply the rules, and it can always provide an explanation for what is going on. Where language models come in is helping with those inputs we discussed: (1) encoding the general knowledge about the use-case in the Reasoning Engine and (2) interpreting what the end-user says about their specific scenario.

For (2), users communicate in plain English and the system responds in kind. To achieve this, we use language models to map what the users say to the structured logic internal to the Reasoning Engine. Here is an example of the customer interacting with the virtual travel agent using conversational language.

This mapping converts plain English to the logical format used by the Reasoning Engine. It is a relatively superficial task, which makes it a better fit for LLMs than the reasoning itself. To guard against mistakes, we design the system to always confirm its interpretation with the user. That way, if something goes wrong, the user can spot and correct it. In the image above, the system both restates its understanding and shows it graphically. As language models become more powerful, this functionality doesn’t change; the advancements simply mean that users can phrase things more flexibly and be understood more often, all while the system requires less training data.
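The interpret-then-confirm pattern can be sketched in a few lines. Here a trivial keyword lookup stands in for the language model, and the `TripRequest` schema, `CITY_CODES` table, and function names are hypothetical; the point is only the shape of the flow: free text becomes a structured request, and the system restates that request so the user can catch a misreading before any reasoning happens.

```python
import re
from dataclasses import dataclass, field


@dataclass
class TripRequest:
    """Structured request passed on to the reasoning layer (hypothetical schema)."""
    add_stops: list[str] = field(default_factory=list)


# Hypothetical lookup table; a language model would handle far more varied phrasing.
CITY_CODES = {"london": "LHR", "paris": "CDG", "tokyo": "NRT"}


def interpret(utterance: str) -> TripRequest:
    """Stand-in for the language-model step: map free text to a structured request."""
    words = re.findall(r"[a-z]+", utterance.lower())
    return TripRequest(add_stops=[CITY_CODES[w] for w in words if w in CITY_CODES])


def confirm(request: TripRequest) -> str:
    """Restate the interpretation so the user can spot and correct a misreading."""
    if not request.add_stops:
        return "Sorry, I didn't catch any destinations. Could you rephrase?"
    return f"Just to confirm: you'd like to add {', '.join(request.add_stops)}. Is that right?"


print(confirm(interpret("I'd love to stop in Tokyo and then London")))
# Just to confirm: you'd like to add NRT, LHR. Is that right?
```

Swapping the keyword lookup for a stronger model would change how much phrasing is understood, but not the confirmation step, which matches the point made above.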

We also use language models to help with (1): encoding the general knowledge about the use case in the Reasoning Engine. To facilitate this, we have developed a precise, logical form of English which we call the Cogent language. While it is understandable to English speakers, it is also executable code for the Reasoning Engine. Expressing general knowledge about a use case in Cogent is all it takes to power the logical reasoning of the application.

We use language models to help translate from more open-ended plain English into the Cogent language. This is analogous to using an LLM to generate code (e.g., GitHub Copilot), except here the “code” is not Java or Python, but the logical English of the Cogent language. Here is an excerpt of the result, showing parts of the above rule translated into Cogent.

It is easy for a human expert to validate the accuracy of the translation: they just need to read the Cogent English and confirm that it captures the intended meaning. There is no need to be an expert in any programming language. Once the Reasoning Engine has the Cogent model, it will always apply that general knowledge correctly when reasoning about individual end-user scenarios.
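The idea of a single artifact that an expert can read and a machine can execute can be illustrated in ordinary Python, using the 16-destination limit mentioned earlier. This is explicitly not Cogent syntax, and the direction is reversed: in Cogent the English text itself is what runs, whereas this hypothetical `MaxCountRule` class generates its English from structured data. It only shows why keeping the readable form and the executable check in one place makes review meaningful.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaxCountRule:
    """One rule object that renders as English for review and runs as a check.

    Illustration only: plain Python, not Cogent syntax.
    """
    subject: str  # what is being counted, in plain words
    limit: int

    def describe(self) -> str:
        # The sentence a domain expert reads to confirm the rule says what was meant.
        return f"An itinerary may include at most {self.limit} {self.subject}."

    def check(self, items: list) -> bool:
        # The same rule, applied mechanically to a concrete itinerary.
        return len(items) <= self.limit


destinations_rule = MaxCountRule("destinations", 16)
print(destinations_rule.describe())  # An itinerary may include at most 16 destinations.
```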

For many important use-cases, we need computers to be at least as reliably correct as human experts. Language models are a poor fit for such use-cases, because they are fundamentally difficult to trust when it comes to correctness. But they still excel at fluently manipulating language, and for this reason they can be used as part of an expert automation solution. EC’s hybrid AI uses language models as an interface around a core logical Reasoning Engine. This allows the EC platform to support products that are both fluent and correct.

The round-the-world ticket use-case was just one example. EC’s Cogent platform works in any domain. Does it sound like it might help with a problem your organization is facing?


Developer of a generative artificial intelligence based technology platform designed to empower human decision-making. The company applies large language models (LLMs) in combination with a variety of other AI (artificial intelligence) techniques, enabling users to accelerate and improve critical decision-making for complex, high-value problems where trust, accuracy, and transparency matter.
